COLUMBUS, Ohio – November 5, 2025 – 54% of North American retailers surveyed say they …

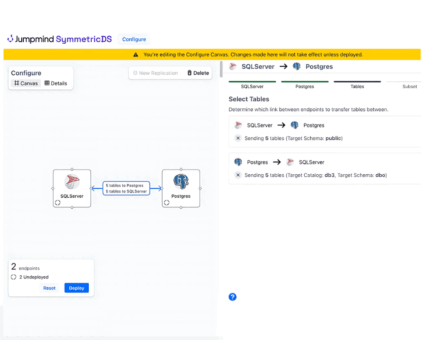

Data Solutions

Data integration software that is truly limitless.

Metl is built on a strong yet flexible foundation to manage data at scale. Filter, transform, configure, and integrate data to solve any challenge that comes your way.