Retailers are constantly looking for new ways to generate revenue and stay competitive. One strategy …

SymmetricDS Transformation Pipeline

Often when setting up data replication it is necessary to redirect or transform the source tables and columns into a different set of target tables and columns. SymmetricDS offers this transformation feature as part of the real time data replication workflow. Below we will review the places within the workflow this transformation can take place and how to configure it.

Overview

Many ETL based tools on the market are great a providing a solution around data manipulation and transformation as part of a scheduled process. However if you need to perform near real time transformations on changes as they occur SymmetricDS can be a powerful asset to continuously perform these transformations as part of the replication process without any additional nightly schedule of batch jobs.

Pipeline

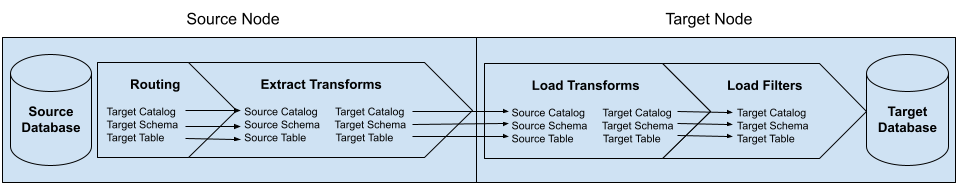

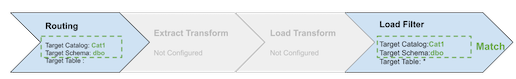

First we need to understand the components that make up the replication pipeline and when they are executed. The image below diagrams all the components that can be potentially used as part of the replication pipeline. In order for these to execute properly though the catalog, schema, and table information configured must match as the batch works through the pipeline. If any of these configuration values (catalog, schema, table) do not line up with the step prior the transforms or filters will not execute.

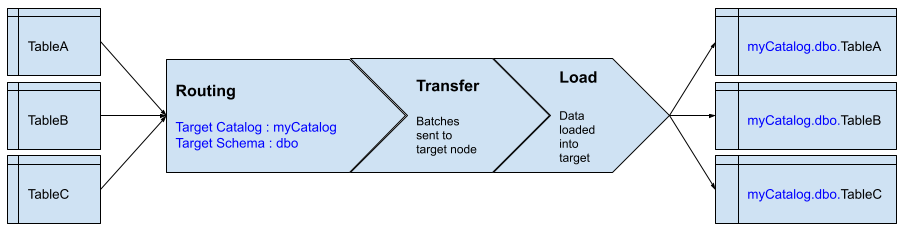

Routing

Routing is the process that checks for newly captured changes and will determine where they need to go by assigning them to a batch. Target catalog, schema, and table information can be set at the router level. This is often the simplest way to change the target information for multiple tables. Setting the target catalog and schema at the router will effect all tables that flow through this router (table routing) and allows a single configuration point.

TIP: Setting target information on the router works well when you just need to change the catalog and/or schema but not the table names. If you need to also transform table names it is better done through transforms.

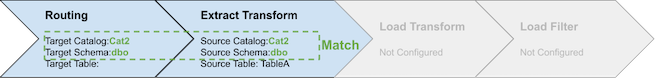

Extract Transforms

These transforms fire on the source if the source catalog, schema, and table match the values that came out of routing. There are 3 ways to hook up an extract transform.

- Routing values are blank for target catalog, schema, and table. Then the extract transform would also have blank entries for source catalog and schema. Source table on the transform could contain a table name or a wildcard (*) to hit all tables.

- Routing has the “use source catalog and schema” set. Then the extract transform source catalog, schema, and table would need to match the values in the trigger (source catalog, schema, and table)

- Routing directly sets any of the values for catalog, schema, or table. Then the extract transform source catalog and schema would need to match these values set on the router.

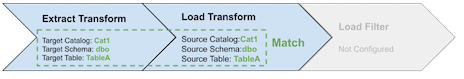

Load Transforms

These transforms fire on the target side if there is a match. There are 2 ways to hook up a load transform

- When an extract transform is present. Then the extract transform target values need to match the load transforms source values.

- No extract transform present. Then the load transform would hook in just like and extract transform following one of the 3 router combinations explained above for the extract transforms.

Load Filters

Load filters must provide a target catalog, schema, and table combination that matches the upstream settings in the pipeline in order to execute. Load filters can provide a variety of customizations on the data once executed. Below is an example of how you could set the target catalog and schema on the target side rather than through a router or transforms. This might be useful if only the target knows where their data should be loaded into (remember routing occurs on the source). Through the use of a wildcard (*) as the target table a single load filter can support all tables rather than setting up a transform for each table.

Example: Beanshell load filter “Before Write” to set the catalog and schema.

// Check that the table is not a SYM_* runtime table

if (!table.getName().startsWith(engine.getTablePrefix())) { table.setCatalog("catalog1");

table.setSchema("dbo");

}

return true;

Example : Similar to one above but the values now are dynamic and could be set in each targets engine properties file (target.catalog and target.schema).

// Check that the table is not a SYM_* runtime tableengine.getParameterService().getString("target.catalog")

if (!table.getName().startsWith(engine.getTablePrefix())) { table.setCatalog();engine.getParameterService().getString("target.schema")

table.setSchema();

}

return true;